Cache

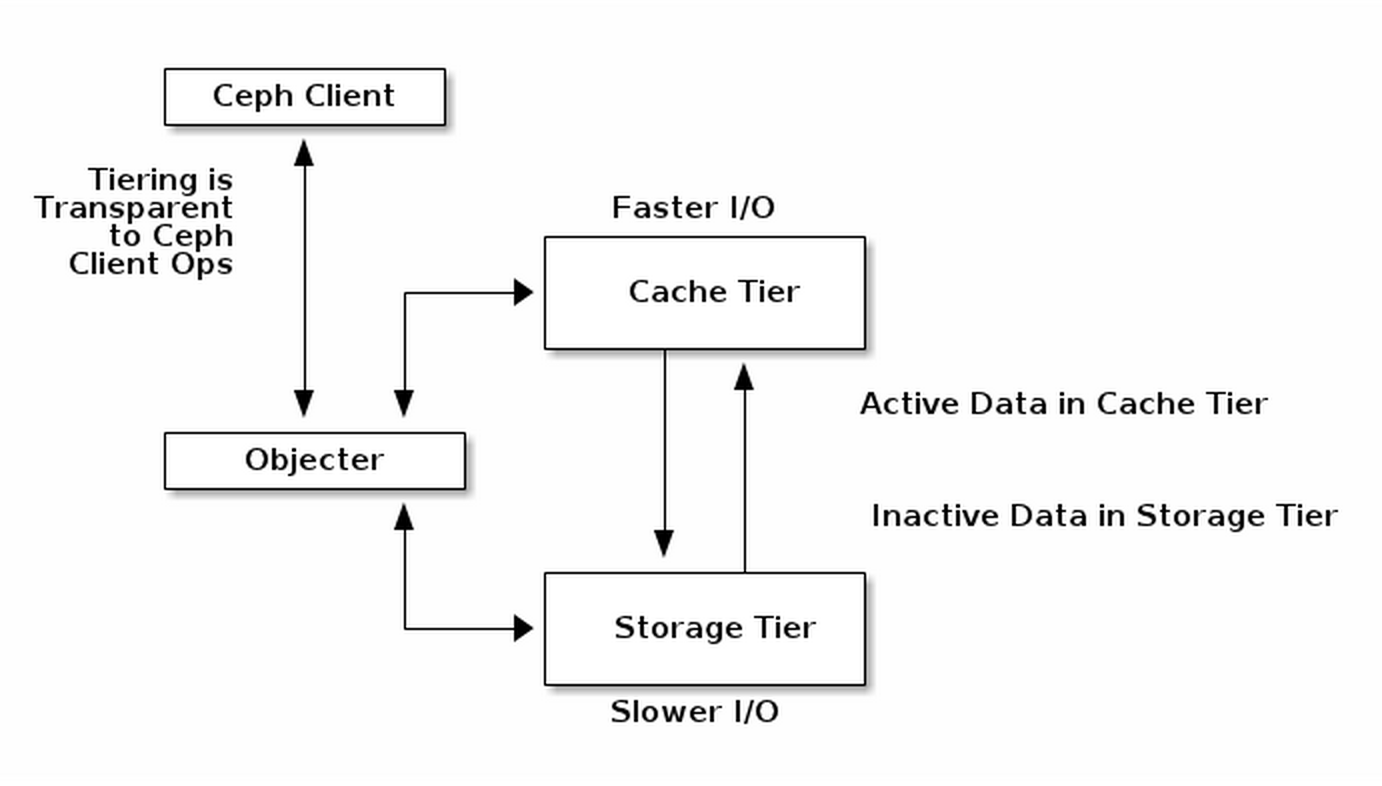

tiering divides the data cluster so that hot data being accessed

regularly can be held on faster storage, typically SSD's, while

erasure-coded cold data sits below on cheaper storage media. Image

credit: Ceph.

new market for Ceph.

While Ceph gained prominence as the open source software-defined storage tool commonly used on the back end of OpenStack deployments, it's not strictly software for the cloud. With its latest enterprise features, Ceph has begun to see adoption among a new class of users interested in software-defined storage for big data applications.

The new enterprise features can be used in both legacy systems and in a cloud context, “but there's almost a third category of object storage within an enterprise,” said Sage Weil, Ceph project leader, in an interview at OSCON. “They're realizing that instead of buying expensive systems to store all of this data that's relatively cold, they can use software-defined open platforms to do that.”

“It's sort of cloudy in the sense that it's scale out,” Weil said, “but it's not really related to compute; it's just storage.”

Two Important New Features

Ceph Enterprise 1.2 contains erasure coding and cache tiering, two features first introduced in Ceph Firefly (v0.80), released in May. Erasure coding packs more data into the same amount of space and requires less hardware than traditional replicated storage clusters, a cost saving for companies that need to keep a lot of archival data around. Cache tiering divides the data cluster so that hot data being accessed regularly can be held on faster storage, typically SSDs, while erasure-coded cold data sits below on cheaper storage media.

Used together, erasure coding and cache tiering let companies store large quantities of relatively cold, rarely accessed data cheaply while still getting fast performance for hot data, all in the same cluster, said Ross Turk, director of product marketing for storage and big data at Red Hat.
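To make the trade-off concrete, here is a toy Python model, not Ceph's implementation. It assumes 3-way replication and an erasure-coding profile of k=4 data chunks plus m=2 coding chunks (illustrative values, not necessarily Ceph's defaults), and it models the cache tier as a small least-recently-used "hot" store in front of a large "cold" store. The names `raw_capacity` and `TieredStore` are hypothetical.

```python
from collections import OrderedDict


def raw_capacity(usable_tb, scheme, k=4, m=2, replicas=3):
    """Raw disk capacity (TB) needed to hold `usable_tb` of data.

    Assumed parameters: replication keeps `replicas` full copies; erasure
    coding splits each object into k data chunks plus m coding chunks,
    so its overhead is (k + m) / k.
    """
    if scheme == "replicated":
        return usable_tb * replicas
    if scheme == "erasure":
        return usable_tb * (k + m) / k
    raise ValueError(f"unknown scheme: {scheme!r}")


class TieredStore:
    """Hypothetical two-tier model: a small LRU hot tier (think SSD) in front
    of a large cold tier (think erasure-coded pool on cheaper disks)."""

    def __init__(self, hot_capacity):
        self.hot_capacity = hot_capacity
        self.hot = OrderedDict()  # object name -> data, least recent first
        self.cold = {}            # stands in for the erasure-coded cold pool
        self.hits = 0
        self.misses = 0

    def write(self, name, data):
        # Writeback-style: new writes land in the hot tier first.
        self._promote(name, data)

    def read(self, name):
        if name in self.hot:
            self.hits += 1
            self.hot.move_to_end(name)  # mark as most recently used
            return self.hot[name]
        self.misses += 1
        data = self.cold[name]  # raises KeyError if never written
        self._promote(name, data)  # a cold read makes the object hot again
        return data

    def _promote(self, name, data):
        self.hot[name] = data
        self.hot.move_to_end(name)
        # Evict least recently used objects, flushing them down to the cold tier.
        while len(self.hot) > self.hot_capacity:
            old_name, old_data = self.hot.popitem(last=False)
            self.cold[old_name] = old_data


if __name__ == "__main__":
    print(raw_capacity(100, "replicated"))  # 300
    print(raw_capacity(100, "erasure"))     # 150.0
    store = TieredStore(hot_capacity=2)
    for i in range(5):
        store.write(f"obj{i}", f"data{i}")
    store.read("obj4")  # hit: still in the hot tier
    store.read("obj0")  # miss: promoted back from the cold tier
    print(store.hits, store.misses)  # 1 1
```

Under these assumed parameters, both layouts survive losing any two disks that hold pieces of the same object, but the erasure-coded layout needs half the raw capacity. In Ceph itself, cache tiers are configured on pools with the `ceph osd tier` family of commands rather than in application code.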

The features are useful both in a cloud platform context and in standalone storage for companies that want the scale-out capabilities the cloud offers but aren't entirely ready to move to the cloud.

“In theory it's great to have elastic resources and move it all to the cloud, but training organizations to adapt to that new paradigm and have their own ops teams able to run it, takes time,” Weil said.

Appealing to big data users

OpenStack was a good first use case for Ceph to target because developers and system administrators on those projects understand distributed software, Weil said. Similarly, a greenfield private cloud deployment is a good use case for Ceph because it's easy to stand up a new storage system at the same time "rather than attack legacy use cases head on," he said.

But enterprise private and hybrid cloud adoption still lags behind public cloud use, according to two recent reports by IDC and Technology Business Research. One reason is that most companies lack the internal IT resources and expertise to move a significant portion of their resources to the cloud, according to a March 2014 enterprise cloud adoption study by Everest Group.

Storage faces an even longer road to adoption than the cloud, given the high standards companies hold it to and the premium they place on retaining data and keeping it secure.

“People require their storage to be a certain level of quality and stability – you can reboot a server but not a broken disk and get your data back,” Turk said.

By offering users in the growing cold storage market an economic advantage, Ceph can also encourage enterprise adoption of open source storage in the short term, without relying on cloud adoption to drive it.

The path to the open source data center

Over the long term, cloud computing and the software-defined data center, including storage, compute, and networking, will become the new paradigm for the enterprise, Weil said. And Ceph, already a dominant open source project in this space, will rise along with it.

"A couple of decades ago you had a huge transformation with Linux going from proprietary Unix OSes sold in conjunction with expensive hardware to what we have today in which you can run Linux or BSD or whatever on a huge range of hardware," Weil said. "I think you'll see the same thing happen in storage, but that battle is just starting to happen."

Red Hat's acquisition of Inktank will help shepherd Ceph along that path to widespread enterprise adoption, starting with this first Ceph Enterprise release. Ceph will also eventually integrate with many of the other projects Red Hat is involved with, Weil said, including the Linux kernel, provisioning tools, and OpenStack itself.